🤖 Scaling Data and Self-Serve Analytics

Apr 16, 2021 • 5 min • Data Science

When I started my first job as a data scientist, we spent a lot of time thinking about how to scale data analysis across the organization. Even today at Hugo, where we are early enough that we don't have a data team, I still think about this problem often.

Saying that you are "data-driven" is one thing, but answering questions like "What does long-term retention look like for DAUs who engaged with feature X?" isn't always so straightforward.

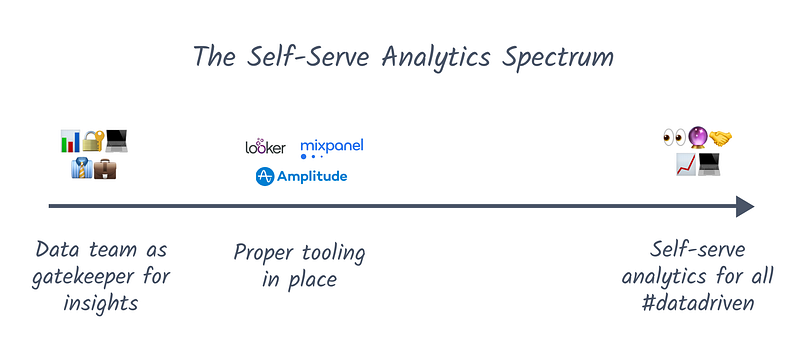

The Spectrum

There is a range of ways that organizations handle this today. On one end of the spectrum is "data team as gatekeeper for insights." This is where someone has a data-oriented question like the prior example, so they either send a Slack message to the data team/person or submit a ticket in a task management tool.

The nice part about this approach is that you can ensure the answer to their question is accurate. The not-so-nice part is that the data team is now a bottleneck across the company and is likely to get bogged down with increasingly monotonous tasks. For these reasons, at a certain scale, almost all teams eventually begin to shift away from this approach.

And where are they shifting towards? Well, on the other end is "self-serve analytics for all." This is normally implemented using something like Mixpanel, Looker, or Amplitude, but the options are endless. If you're at a large company like Facebook or Airbnb then internal tools can fall into this category as well.

Let's consider our sample question again: "What does long-term retention look like for DAUs who engaged with feature X?" How would a PM answer this in a "self-serve analytics for all" environment?

They might fire up Mixpanel, head over to the retention tab, filter for DAUs, and then create a cohort for those that triggered the "Engaged with this feature" event. No ticket or work needed from the data team. The PM is happy since they are enabled by data rather than blocked by it. Sounds great, right? Not so fast.
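To make the question concrete, here is a minimal sketch of the calculation a retention report performs behind the scenes. It is not how Mixpanel or any other tool implements it. The event names, the sample rows, and the `retention` helper are all hypothetical, and "retained" is assumed to mean "had any event exactly N days after the cohort day."

```python
from datetime import date, timedelta

# Hypothetical event log: (user_id, event_name, event_date).
# Names and rows are made up purely for illustration.
EVENTS = [
    ("u1", "engaged_feature_x", date(2021, 3, 1)),
    ("u1", "app_open", date(2021, 3, 31)),
    ("u2", "engaged_feature_x", date(2021, 3, 1)),
    ("u2", "app_open", date(2021, 3, 5)),
    ("u3", "app_open", date(2021, 3, 1)),
    ("u3", "app_open", date(2021, 3, 31)),
]


def retention(events, cohort_event, start_day, day_n):
    """Share of users who fired cohort_event on start_day and had
    any event exactly day_n days later. Returns None for an empty cohort."""
    target_day = start_day + timedelta(days=day_n)
    # Cohort: users who triggered the feature event on the start day.
    cohort = {user for user, name, day in events
              if name == cohort_event and day == start_day}
    if not cohort:
        return None
    # Retained: cohort members with any activity on the target day.
    retained = {user for user, _, day in events
                if user in cohort and day == target_day}
    return len(retained) / len(cohort)


day30 = retention(EVENTS, "engaged_feature_x", date(2021, 3, 1), 30)
print(day30)
```

Even this toy version bakes in decisions (which event defines the cohort, what counts as "active," which day counts as day 30) that a point-and-click report makes on the user's behalf.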

Limitations of Self-Serve Analytics

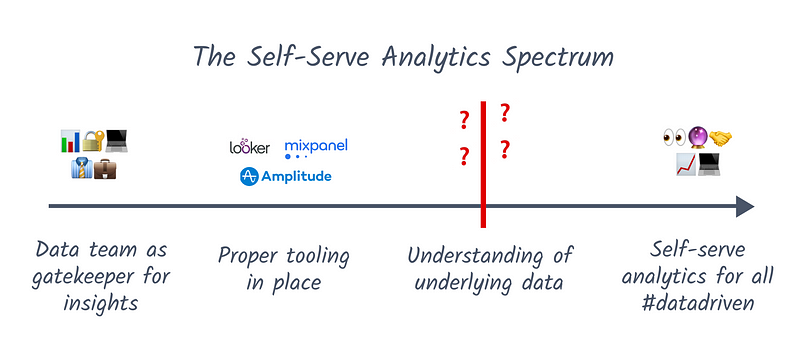

Once you start down this path, you quickly realize that the true bottleneck in enabling self-serve analytics isn't the tooling. It's all about the underlying data:

- Which table to pull from

- What each column means

- Quirks and tracking issues

These are just a handful of the many hiccups that you encounter while working with data. They seem like trivial questions that could be addressed with a little documentation, but you'd be surprised. At the vast majority of companies, this is still tribal knowledge within the data team.

Until you enable a self-serve path to an understanding of the data itself, any self-serve tooling improvements will be at best ineffective and at worst dangerous.
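As an illustration of the "dangerous" case, the sketch below answers the same question twice: once naively, and once with knowledge of two quirks that typically live only in the data team's heads. The rows, the internal-account flag, and the double-firing event are all invented for this example.

```python
# Hypothetical rows: (user_id, event_name, is_internal_test_account).
# The data and both quirks are made up for illustration.
ROWS = [
    ("u1", "engaged_feature_x", False),
    ("u2", "engaged_feature_x", False),
    ("qa1", "engaged_feature_x", True),   # internal QA account
    ("qa2", "engaged_feature_x", True),   # internal QA account
    ("u1", "engaged_feature_x", False),   # same user, event fired twice
]


def naive_count(rows, event):
    """What a user unaware of the quirks might compute: raw event rows."""
    return sum(1 for _, name, _ in rows if name == event)


def informed_count(rows, event):
    """Distinct real users: drops internal accounts and duplicate fires."""
    return len({user for user, name, internal in rows
                if name == event and not internal})


naive = naive_count(ROWS, "engaged_feature_x")
informed = informed_count(ROWS, "engaged_feature_x")
print(naive, informed)
```

Both numbers come out of the same tool and the same table. Only the second reflects how the data actually behaves, and nothing in the tooling tells the user which one they are looking at.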

This is the same reason why learning SQL is often an overrated skill for most PMs. Self-serve tools are, at the end of the day, just an interface for SQL. You still need a path to understanding the underlying data. And if that path involves messaging the data team, asking "What does column X mean?" then true "self-serve analytics" are still an aspiration.

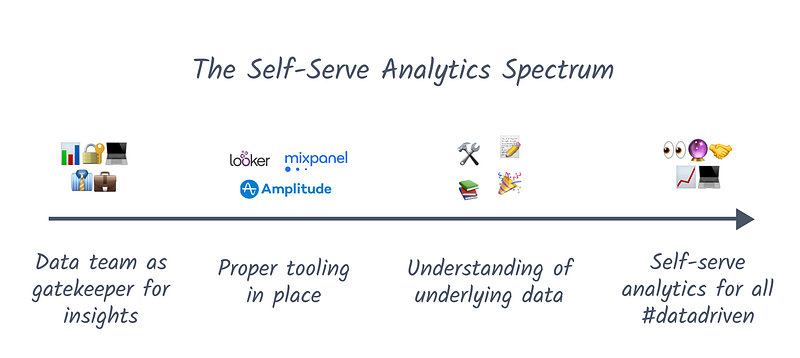

Working Towards a Solution

It takes time and discipline to overcome these limitations and effectively enable self-serve analytics across an organization. In my experience, there isn't a silver bullet, but there are a few lead ones:

- Infrastructure: Invest in the data modeling and engineering layer to increase understandability of data models and events. Reduce duplication. Be opinionated about the source of truth. Stick to a consistent naming convention.

- Documentation: Put a process in place for documenting tables, columns, and any relevant data quirks. It also helps to have "verification" signaling on tables that are vetted by the data team and can be trusted.

- Education: Take time to help your users learn how to use the tooling and understand how the data is structured. Point out where they can access documentation to answer their questions in the future. Run through examples in real time to ensure understanding.

- Evangelism: When someone gets value out of your self-serve analytics platform, documentation, or infrastructure, call it out! Celebrate wins both small and large to encourage others in the organization to make analytics a part of their workflow.
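The documentation point above can be sketched as a tiny data dictionary. This is one possible shape under stated assumptions, not a recommendation of a specific product: `TableDoc`, `CATALOG`, `describe`, and `trusted_tables` are hypothetical names, and the table and column entries are invented.

```python
from dataclasses import dataclass, field


@dataclass
class TableDoc:
    """Documentation record for one table (hypothetical structure)."""
    name: str
    description: str
    verified: bool = False                       # vetted by the data team?
    columns: dict = field(default_factory=dict)  # column name -> meaning
    quirks: list = field(default_factory=list)   # known tracking issues


# Invented entries, for illustration only.
CATALOG = {
    "events_clean": TableDoc(
        name="events_clean",
        description="One row per user event, deduplicated.",
        verified=True,
        columns={"user_id": "Stable account identifier."},
        quirks=["Internal test accounts are already excluded."],
    ),
    "events_raw": TableDoc(
        name="events_raw",
        description="Unprocessed tracking events.",
    ),
}


def describe(catalog, table, column):
    """Answer 'What does column X mean?' without messaging the data team."""
    doc = catalog.get(table)
    if doc is None:
        return f"{table}: not documented"
    status = "verified" if doc.verified else "unverified"
    meaning = doc.columns.get(column, "no column documentation yet")
    return f"{table}.{column} ({status}): {meaning}"


def trusted_tables(catalog):
    """Tables carrying the data team's verification signal."""
    return sorted(name for name, doc in catalog.items() if doc.verified)


print(describe(CATALOG, "events_clean", "user_id"))
print(trusted_tables(CATALOG))
```

The format matters less than the habit: if the answer to "which table should I pull from?" can be looked up, the self-serve tooling has something trustworthy to sit on top of.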

Wrapping Up

If I sounded skeptical of self-serve analytics in this post, it's because I am! I feel that far too many teams simply hook up BI tools and expect game-changing results. The tooling is just the first step, and in my opinion, often the easiest one.

All that being said, I do believe there's light at the end of the tunnel. There are lots of great new products on the infrastructure and documentation side, as well as leaders promoting education and evangelism around self-serve analytics. We're undoubtedly going places, but it's important to be realistic about the place we are today.

Thanks for reading!